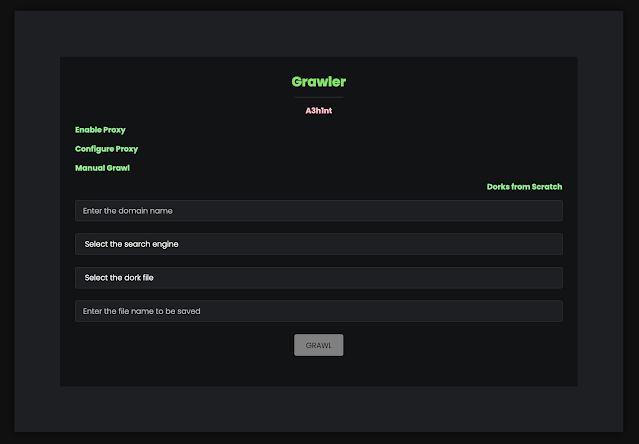

Grawler – Tool Which Comes With A Web Interface That Automates The Task Of Using Google Dorks, Scrapes The Results, And Stores Them In A File

Grawler is a tool written in PHP that comes with a web interface. It automates the task of using Google dorks, scrapes the results, and stores them in a file.

General Info

Grawler aims to automate the task of using Google dorks through a web interface. The main idea is to provide a simple yet powerful tool that anyone can use. Grawler comes pre-loaded with the following features.

Features

- Supports multiple search engines (Google, Yahoo, Bing)

- Comes with files containing dorks, which are grouped into three categories as of now, plus a file for your own dorks.

  - Filetypes

  - Login pages

  - SQL injections

  - My_dorks (this file is intentionally left blank so users can add their own list of dorks)

- Comes with its own guide to learning the art of Google dorks.

- Built-in option to use a proxy (just in case Google blocks you)

- Saves all the scraped URLs in a single file (the file name needs to be specified in the input field with the extension .txt).

- Grawler can run in four different modes

  - Automatic mode: Grawler uses the dorks present in a file and stores the results.

  - Automatic mode with proxy enabled

  - Manual mode: this mode is for users who only want to test a single dork against a domain.

  - Manual mode with proxy enabled
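
As a rough illustration of the automatic and manual modes described above, the sketch below builds search URLs from a list of dorks and an optional target domain. It is written in Python for brevity and is not Grawler's actual PHP code. The function names (`load_dorks`, `build_query_url`, `automatic_mode`), the `#` comment convention for dork files, and the endpoint table are all assumptions made for this example.

```python
from urllib.parse import urlencode

# Search endpoints and their query parameter names (assumed for illustration).
SEARCH_ENDPOINTS = {
    "google": ("https://www.google.com/search", "q"),
    "bing": ("https://www.bing.com/search", "q"),
    "yahoo": ("https://search.yahoo.com/search", "p"),
}


def load_dorks(lines):
    """Return non-empty dorks, skipping blank lines and '#' comments (hypothetical format)."""
    dorks = []
    for line in lines:
        line = line.strip()
        if line and not line.startswith("#"):
            dorks.append(line)
    return dorks


def build_query_url(engine, dork, domain=None):
    """Build a search URL for one dork, optionally restricted to a domain via site:."""
    base, param = SEARCH_ENDPOINTS[engine]
    query = f"site:{domain} {dork}" if domain else dork
    return f"{base}?{urlencode({param: query})}"


def automatic_mode(engine, dork_lines, domain=None):
    """Automatic mode in miniature: one search URL per dork in the file."""
    return [build_query_url(engine, d, domain) for d in load_dorks(dork_lines)]


if __name__ == "__main__":
    sample = ["inurl:login", "", "# my notes", "filetype:pdf"]
    for url in automatic_mode("google", sample, domain="example.com"):
        print(url)
```

Manual mode corresponds to a single call to `build_query_url` with one dork and one domain. Fetching the result pages and extracting the URLs is left out here, since that part depends on each search engine's markup.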

Setup

- Download the ZIP file

- Download XAMPP server

- Move the folder to the htdocs folder in XAMPP

- Navigate to http://localhost/grawler

Demo

- How to run Grawler?

- There are several ways to use a proxy. I have personally used Scrapeapi because they give free API calls without asking for credit card information or anything else, and you can even get more API calls for free.

- How to run Grawler in manual mode?

Sample Result

Captcha Issue

Sometimes the Google captcha can be an issue, because Google rightfully detects the bot and tries to block it. These are the ways I have already tried to avoid the captcha:

- Using different user-agent headers and IPs in a round-robin algorithm.

  - It works, but it returns a lot of garbage URLs that are of no use, and on top of that it is also slow, so I removed that feature.

- Using free proxy servers

  - Free proxy servers are too slow, and they often get blocked because a lot of people use them for scraping.

- Sleep function

  - This works to some extent, so I have included it in the code.

- Tor network

  - Nope, it doesn't work. Every time I tried it, a beautiful captcha was presented, so I removed this functionality too.
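
The sleep approach mentioned above can be sketched as a pause between consecutive requests. This is a minimal Python illustration, not the code Grawler ships with. The randomized jitter, the default timings, and the names `jittered_delays` and `run_throttled` are assumptions for the example.

```python
import random
import time


def jittered_delays(count, base=5.0, jitter=3.0, seed=None):
    """Return `count` delays in seconds, each between base and base + jitter."""
    rng = random.Random(seed)
    return [base + rng.uniform(0.0, jitter) for _ in range(count)]


def run_throttled(tasks, delays, sleep=time.sleep):
    """Call each task in order, sleeping before every task except the first."""
    results = []
    for index, task in enumerate(tasks):
        if index > 0:
            sleep(delays[index - 1])
        results.append(task())
    return results


if __name__ == "__main__":
    # Stand-in tasks; a real scraper would fetch a search results page here.
    tasks = [lambda n=n: f"query {n} done" for n in range(3)]
    delays = jittered_delays(len(tasks) - 1, base=0.1, jitter=0.1)
    print(run_throttled(tasks, delays))
```

Passing `sleep` as a parameter keeps the helper easy to test without actually waiting. Longer delays lower the request rate but, as noted above, only help up to a point.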

Solution

- The best solution I have found is to sign up for a proxy service and use it. It gives good results with fewer garbage URLs, but it can be slow at times.

- Use a VPN.
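
Many proxy services accept the target URL and an API key as query parameters on their own endpoint. The Python sketch below shows that general pattern only. The endpoint `https://proxy.example.com/`, the parameter names `api_key` and `url`, and the helper `via_proxy` are placeholders, not the API of any specific provider or of Grawler itself; check your provider's documentation for the real values.

```python
from urllib.parse import urlencode

# Placeholder endpoint: replace with the one documented by your proxy provider.
PROXY_ENDPOINT = "https://proxy.example.com/"


def via_proxy(target_url, api_key, endpoint=PROXY_ENDPOINT):
    """Wrap target_url in a request URL for a (hypothetical) proxy API."""
    params = {"api_key": api_key, "url": target_url}  # parameter names are assumed
    return f"{endpoint}?{urlencode(params)}"


if __name__ == "__main__":
    print(via_proxy("https://www.google.com/search?q=inurl%3Alogin", "YOUR_KEY"))
```

The target URL is percent-encoded as a whole, so its own query string survives the trip through the proxy endpoint intact.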

Contribute

- Report bugs

- Add new features and functionality

- Add more effective Google dorks (ones that actually work)

- Work around the captcha issue

- Create a Docker image (basically, work on portability and usability)

- Suggestions

Contact Me

You can contact me here, A3h1nt, regarding anything.